The entropy of a source is the average information content of all the possible messages it can produce, with each message weighted by its probability. In the example of drawing a card from a 52-card deck, each card has the same chance of being drawn. Since every outcome is equally likely, the entropy of this source equals the information content of any single card, log₂ 52 ≈ 5.70044 bits. For most sources, however, the information content varies from message to message, because some messages are more likely to be sent than others. Unlikely messages convey the most information, while highly probable messages convey less.
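As a minimal sketch (the function name `entropy` is our own, not from the text), entropy can be computed as the probability-weighted average of each message's information content, −log₂ p. For 52 equally likely cards every term is identical, so the average equals the information content of a single card:

```python
import math

def entropy(probs):
    """Average information content, in bits, of a source with the given message probabilities."""
    # Zero-probability messages are skipped: p * log2(p) tends to 0 as p tends to 0.
    return sum(-p * math.log2(p) for p in probs if p > 0)

# A fair 52-card deck: every card has probability 1/52.
deck = [1 / 52] * 52
print(round(entropy(deck), 5))   # 5.70044
print(round(math.log2(52), 5))   # 5.70044
```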

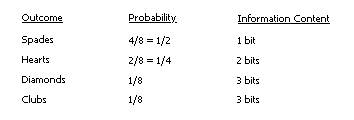

The following example demonstrates the entropy of a source whose outcomes are not equally likely. Consider a deck of 8 cards consisting of 4 spades, 2 hearts, 1 diamond, and 1 club. The probabilities of drawing each suit at random are no longer equal. The probability and the corresponding information content of drawing each suit are listed in the following table:

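| Suit | Cards in deck | Probability | Information content |
|---|---|---|---|
| Spades | 4 | 1/2 | 1 bit |
| Hearts | 2 | 1/4 | 2 bits |
| Diamonds | 1 | 1/8 | 3 bits |
| Clubs | 1 | 1/8 | 3 bits |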

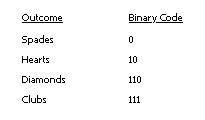
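The probabilities and information content for this deck follow directly from the card counts. A short sketch (the variable names are our own) computes them with exact fractions, using I = −log₂ p for each suit:

```python
import math
from fractions import Fraction

# The 8-card deck from the example: 4 spades, 2 hearts, 1 diamond, 1 club.
counts = {"spades": 4, "hearts": 2, "diamonds": 1, "clubs": 1}
total = sum(counts.values())

# Probability of drawing each suit, kept as an exact fraction.
probs = {suit: Fraction(n, total) for suit, n in counts.items()}

# Information content in bits: rarer suits carry more information.
info_bits = {suit: -math.log2(p) for suit, p in probs.items()}

print(info_bits)  # {'spades': 1.0, 'hearts': 2.0, 'diamonds': 3.0, 'clubs': 3.0}
```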

In this case the entropy, or average information content, of the source is exactly (1/2 × 1) + (1/4 × 2) + (1/8 × 3) + (1/8 × 3) = 1.75 bits. Although there are four possible messages, some are more likely than others, so on average fewer than two bits are needed to specify which suit was drawn. To see how this particular source can be represented using an average of 1.75 bits, consider the following binary code:
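The weighted sum above is an instance of the standard entropy formula. Written in general form (the symbols H, pᵢ and Iᵢ are our notation) and then evaluated for this deck, with four equally likely suits shown for comparison:

$$
H = -\sum_{i} p_i \log_2 p_i = \sum_{i} p_i \, I_i, \qquad I_i = \log_2 \frac{1}{p_i}
$$

$$
H = \tfrac{1}{2}(1) + \tfrac{1}{4}(2) + \tfrac{1}{8}(3) + \tfrac{1}{8}(3) = 0.5 + 0.5 + 0.375 + 0.375 = 1.75 \text{ bits}
$$

$$
H_{\text{uniform}} = \log_2 4 = 2 \text{ bits} > 1.75 \text{ bits}
$$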


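Here the most likely outcome (spades) is represented by the shortest codeword and the least likely outcomes (diamonds and clubs) by the longest. Weighted by their probabilities, the codeword lengths average exactly 1.75 bits, which is the source entropy. By Shannon's source coding theorem, no uniquely decodable code can have an average length shorter than the entropy, so no further improvement is possible.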
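The following sketch checks the average-length argument with a concrete prefix code. The codewords `0`, `10`, `110`, `111` are an assumption chosen for illustration; only their lengths (1, 2, 3 and 3 bits, matching each suit's information content) matter for the average. The helper names `encode` and `decode` are hypothetical.

```python
from fractions import Fraction

# Hypothetical prefix code: lengths 1, 2, 3, 3 match each suit's information
# content. The exact bit patterns are assumed for illustration only.
code = {"spades": "0", "hearts": "10", "diamonds": "110", "clubs": "111"}
probs = {"spades": Fraction(1, 2), "hearts": Fraction(1, 4),
         "diamonds": Fraction(1, 8), "clubs": Fraction(1, 8)}

# Average code length, weighted by how often each suit is drawn.
avg_len = sum(probs[s] * len(w) for s, w in code.items())
print(avg_len)  # 7/4, i.e. 1.75 bits per draw

def encode(suits):
    """Concatenate the codewords for a sequence of draws."""
    return "".join(code[s] for s in suits)

def decode(bits):
    """Read bits left to right; since no codeword prefixes another, the first match is the symbol."""
    inverse = {w: s for s, w in code.items()}
    out, buf = [], ""
    for b in bits:
        buf += b
        if buf in inverse:
            out.append(inverse[buf])
            buf = ""
    if buf:
        raise ValueError(f"trailing bits do not form a codeword: {buf!r}")
    return out

msg = ["spades", "hearts", "spades", "clubs", "diamonds"]
bits = encode(msg)
print(bits)                 # 0100111110
print(decode(bits) == msg)  # True
```

Because every codeword length equals −log₂ p for its suit, the average length meets the entropy exactly. When probabilities are not powers of 1/2, a code with whole-bit codewords can only guarantee an average length within one bit of the entropy.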

2015-08-21
