Information theory provides a method for determining exactly how many bits are required to specify a given message to a given precision. This method is called the theory of data compression or, more technically, rate distortion theory. Often the message selected at the source need not (or cannot) be transmitted perfectly to the destination. For example, in a telephone conversation, extremely high sound quality is not necessary. It is usually sufficient that the two parties recognize and understand each other. Similarly, if the message is a scientific measurement—for example, a measurement of the number = 3.14159265…—it is not possible to transmit all of the digits in a finite amount of time. However, a useful approximation of can be transmitted with a relatively small number of bits.

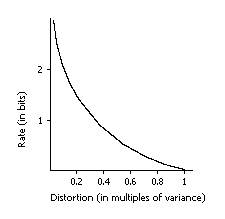

The general idea of data compression theory is illustrated in the graph below. The horizontal axis measures the distortion, or imprecision, that is tolerable in a given message. The vertical axis gives the minimum possible number of bits required to specify the message with this distortion. The graph shows that as the acceptable distortion becomes smaller and smaller, the required number of bits becomes larger and larger. Conversely, as the allowed distortion becomes larger, the required number of bits decreases. Ultimately, the number of required bits becomes zero. The number becomes equal to zero when the allowed distortion can be achieved by merely guessing at the message.

|

Consider the following simple example. If the message is the outcome of the toss of an ordinary coin and it is acceptable to be wrong 50 percent of the time, then no bits are required. In this case, one can simply guess heads and be sure of being right 50 percent of the time, on average.

The relationship shown by the rate-distortion curve represents the absolute minimum number of bits, on the average, required to represent a message with a given distortion. The exact shape of the curve depends on the details of the particular situation. Shannon’s Fundamental Theorem of Data Compression states that, in principle, it is possible to compress a message to a given level, but no more. Exactly how this compression should be done he did not say, but later researchers developed practical techniques for data compression based on Shannon’s theorems. These techniques use mathematical algorithms, or repeated computations, to compress data. Many of these methods come very close to achieving the ultimate limits set forth by information theory.

2015-08-21

2015-08-21 326

326