Information theory is often used to design communication systems. An important property of a transmission system is its channel capacity. Sometimes known as the Shannon limit, channel capacity is a measure of the ultimate speed or rate at which a channel can transmit information reliably. The capacity of a particular system can be approached but never exceeded.

Channel capacity is measured in bits per second for a transmission channel and in bits per centimeter (or per square centimeter) for storage channels. Information theory says that if the transmitter is properly designed, information can be transmitted with an arbitrarily small probability of error at any speed below the channel’s capacity. However, if capacity is exceeded, then inevitably the message will be received at the destination with errors.

One factor that decreases capacity is noise, or unwanted information. Most communication channels introduce some amount of noise, such as radio static, into the message. Noise interferes with messages and often causes errors to occur during transmission. For example, a noisy channel that is used to transmit zeros and ones might occasionally change a zero into a one or a one into a zero. In such a case, the message 10001 might be received as 11001. Information theory provides a way to measure the severity of the noise by measuring the capacity of a channel.
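The link between noise and capacity can be made concrete with a small calculation. The sketch below assumes the simplest model of the zero-and-one channel just described, usually called a binary symmetric channel, in which each bit is flipped independently with the same probability. That model and the function names are illustrative assumptions, not something the text above specifies. Under that model the capacity is 1 minus the binary entropy of the flip probability, measured in bits per transmitted bit.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a binary event that occurs with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def bsc_capacity(flip_prob: float) -> float:
    """Capacity, in bits per transmitted bit, of a binary symmetric channel.

    Assumes every bit is flipped independently with probability flip_prob.
    """
    return 1.0 - binary_entropy(flip_prob)


if __name__ == "__main__":
    for flip_prob in (0.0, 0.01, 0.1, 0.5):
        capacity = bsc_capacity(flip_prob)
        print(f"flip probability {flip_prob:.2f}: capacity {capacity:.3f}")
```

A noiseless channel (flip probability 0) has capacity 1, while a channel that flips half of its bits has capacity 0, because its output says nothing about its input.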

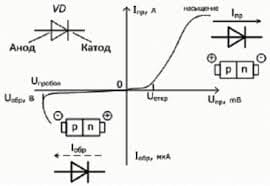

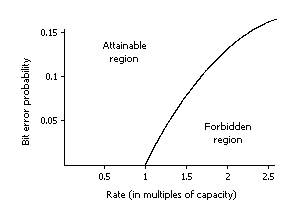

The graph below shows the fraction of received bits that are erroneous as a function of the transmission rate, measured in multiples of capacity. For example, if the capacity is 1,000 bits per second, a rate of 0.5 equals a transmission speed of 500 bits per second. Surprisingly, the fraction of erroneous bits need not increase as the transmission speed increases, until capacity is reached. Once capacity is exceeded, however, errors are inevitable. For example, at a speed of 99 percent of capacity, essentially no errors have to occur, but at twice capacity at least 11 percent of the bits will be erroneous, no matter how well the system is designed.
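The 11 percent figure can be checked numerically. The sketch below assumes that the source produces equally likely zeros and ones and that the curve is the standard lower bound for that case, in which the smallest achievable error fraction p at a rate of R times capacity satisfies H(p) = 1 - 1/R, where H is the binary entropy function. This assumption is consistent with the figure quoted above, but it is not stated in the text, and the function names are illustrative.

```python
import math


def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a binary event that occurs with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def min_bit_error_rate(rate_over_capacity: float) -> float:
    """Smallest achievable fraction of erroneous bits at a given rate.

    The rate is expressed as a multiple of capacity. Assumes equally
    likely source bits, so the bound solves H(p) = 1 - 1/rate.
    """
    if rate_over_capacity <= 1.0:
        return 0.0  # at or below capacity, no errors are forced
    target = 1.0 - 1.0 / rate_over_capacity
    low, high = 0.0, 0.5  # binary entropy is increasing on this interval
    for _ in range(60):  # bisection
        mid = (low + high) / 2
        if binary_entropy(mid) < target:
            low = mid
        else:
            high = mid
    return (low + high) / 2


if __name__ == "__main__":
    for rate in (0.5, 0.99, 1.5, 2.0, 4.0):
        print(f"rate {rate:.2f} x capacity: at least "
              f"{100 * min_bit_error_rate(rate):.1f}% of bits in error")
```

At twice capacity the bound comes out at roughly 0.11, and it climbs toward one half as the rate grows, since a receiver that simply guesses is right half of the time.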

However, even when transmission rates are below capacity, noise usually introduces some errors. To combat the effects of noise, engineers strive to build systems that will transmit messages close to capacity over a noisy channel without introducing errors. To do so, they use error-correcting coding. If the information source is a random sequence of bits, a device in the transmitter called the encoder uses these data bits to carefully calculate a number of check bits. The idea is that when the data bits and the check bits are combined, a strong pattern appears. The data bits and the check bits are then transmitted together over the noisy channel. The combination of data bits and check bits is usually called a code word.
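As an illustration of how check bits are calculated, the sketch below uses the Hamming (7,4) code, a classic textbook code that adds three check bits to every four data bits. The choice of this code, the bit ordering, and the function name are assumptions made for the example; the text above does not name a particular code. Each check bit is chosen so that a specific group of positions in the code word contains an even number of ones, which is the "pattern" the receiver can later look for.

```python
def hamming74_encode(data_bits):
    """Return the 7-bit code word for 4 data bits.

    Assumed layout (positions 1..7): check bits at positions 1, 2, 4 and
    data bits at positions 3, 5, 6, 7.
    """
    d1, d2, d3, d4 = data_bits
    p1 = d1 ^ d2 ^ d4  # makes positions 1, 3, 5, 7 have even parity
    p2 = d1 ^ d3 ^ d4  # makes positions 2, 3, 6, 7 have even parity
    p4 = d2 ^ d3 ^ d4  # makes positions 4, 5, 6, 7 have even parity
    return [p1, p2, d1, p4, d2, d3, d4]


if __name__ == "__main__":
    data = [1, 0, 1, 1]
    print("data bits:", data)
    print("code word:", hamming74_encode(data))
```

Because every data bit takes part in at least two of the three parity groups, changing any single data bit changes at least two check bits, so valid code words differ from one another in at least three positions.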

Noise in the channel may corrupt both the data bits and the check bits. However, Shannon showed that if the check bits are computed in just the right way, the pattern in the code word will be so strong that the pattern will almost always be recognizable despite the channel noise. A pattern-recognizing device, which is called a decoder, forms part of the receiver. Its job is to reconstruct the original data, using the noisy data and the noisy check bits as clues.
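A decoder for a small code shows how the pattern is used to undo noise. The sketch below assumes a Hamming (7,4) code with check bits at positions 1, 2, and 4 of a seven-bit code word; this is a later, practical code chosen for illustration, not Shannon's own construction, and the function name is hypothetical. The decoder rechecks the three parity groups. If any of them fails, the failing checks spell out, in binary, the position of the single flipped bit, which is then flipped back.

```python
def hamming74_decode(received_bits):
    """Recover 4 data bits from a 7-bit word with at most one flipped bit.

    Assumed layout (positions 1..7): check bits at positions 1, 2, 4 and
    data bits at positions 3, 5, 6, 7.
    """
    r = list(received_bits)
    s1 = r[0] ^ r[2] ^ r[4] ^ r[6]  # parity of positions 1, 3, 5, 7
    s2 = r[1] ^ r[2] ^ r[5] ^ r[6]  # parity of positions 2, 3, 6, 7
    s4 = r[3] ^ r[4] ^ r[5] ^ r[6]  # parity of positions 4, 5, 6, 7
    error_position = s1 + 2 * s2 + 4 * s4  # 0 means every check passed
    if error_position:
        r[error_position - 1] ^= 1  # flip the suspect bit back
    return [r[2], r[4], r[5], r[6]]


if __name__ == "__main__":
    sent = [0, 1, 1, 0, 0, 1, 1]  # code word for data bits 1, 0, 1, 1
    received = list(sent)
    received[4] ^= 1  # noise flips the bit at position 5
    print("received:", received)
    print("decoded: ", hamming74_decode(received))
```

This code repairs only one flipped bit per seven-bit word, so it falls well short of the capacity-approaching codes described in the text; it is meant only to show the roles of the check bits and the decoder.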

Shannon did not show how the encoder and decoder should be designed; he showed only that suitable check bits must exist. Later generations of mathematicians and engineers went on to design practical error-correcting systems, so that it is now possible to communicate reliably at speeds near capacity.

2015-08-21
